System Technical Specifications

Hardware Details for Midway3

Midway3 System Overview

Midway3 is the latest high-performance computing (HPC) cluster built, deployed, and maintained by the Research Computing Center (RCC). Midway3 features a heterogeneous collection of the latest HPC technologies, including Intel Cascade Lake and AMD EPYC Rome processors, HDR InfiniBand interconnect, NVIDIA V100, A100 and RTX 6000 GPUs, and SSD storage controllers for faster small-file I/O. Two big memory nodes are available to all users. They have the Standard Intel Compute Node specifications but with larger memory configurations.

The Intel specific hardware resources have the following key features:

- 222 nodes total (10,560 cores)

- 210 standard compute nodes

- 2 big memory nodes

- 11 GPU nodes (5 RTX 6000, 5 V100, 1 A100)

- All nodes have HDR InfiniBand (100 Gbps) network cards.

- Each node has a 960 GB local SSD

- The operating system throughout the cluster is CentOS 8

Compute Nodes

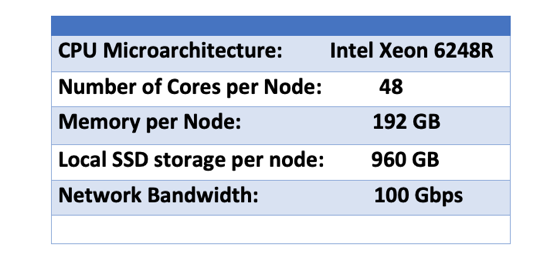

There are a total of 210 standard Intel Cascade Lake CPU-only nodes available to all users.

Each node has the following base components:

- 2x Intel Xeon Gold 6248R (48 cores per node)

- 192 GB of conventional memory

- HDR InfiniBand (100 Gbps) network card
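The per-node figures above imply some simple sizing arithmetic that can help when deciding how much memory to request per core. This is a nominal calculation only: it derives 24 cores per socket from the 48 cores per node across two Xeon Gold 6248R processors, and it assumes memory is divided evenly across cores.

$$
2 \times 24 = 48\ \text{cores per node}, \qquad \frac{192\ \text{GB}}{48\ \text{cores}} = 4\ \text{GB per core}, \qquad 210 \times 48 = 10{,}080\ \text{cores across the standard nodes}
$$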

Storage

Distributed file system storage: 2.2 PB of high-performance GPFS storage

Important News

Midway/Midway2 storage is NOT mounted on the Midway3 cluster.

Post-Production Work

The RCC intends to mount Midway2 storage on Midway3 and vice versa. This integration work will occasionally interrupt Midway3 production for short periods. The RCC will announce the anticipated maintenance periods in advance so that users can plan accordingly.

Storage Options

The Midway3 system was deployed as a stand-alone system with its own storage because it was a complete re-architecture of the core Midway building block. While the RCC works to make Midway2 storage accessible from Midway3 and vice versa, it offers an interim option: temporary storage can be allocated on either system, as long as the total storage used across both systems does not exceed the PI’s CPP storage plus any RCC-allocated storage.

- For example, if a PI has 100 TB of CPP storage on Midway2 and 30 TB of it is unused, a temporary quota of up to 30 TB can be allocated on Midway3. Once the RCC completes cross-mounting the storage of the two systems, the data will be moved back to the original CPP storage location. All new storage will continue to reside on Midway3. The transition will be seamless for users and will not impact running jobs on either system.

- If combined usage across the two storage systems exceeds the soft quota (in this example, 100 TB combined across all available allocations), the system starts a 30-day grace period countdown that runs until usage drops below the quota. If the grace period expires, or combined usage exceeds the hard quota (101 TB combined), both storage spaces (Midway2 and Midway3) become read-only until usage drops below the soft quota.

- A transfer of Midway2 CPP storage to Midway3 CPP is not permissible.
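The allocation rule and quota policy described above can be sketched as a small Python model. This is a minimal illustration under stated assumptions, not the RCC's enforcement code: the function names `max_temporary_quota_tb` and `storage_state` are hypothetical, the 100 TB soft and 101 TB hard thresholds come from the example, and the model treats usage exactly at the soft quota as compliant.

```python
from datetime import date, timedelta
from typing import Optional

# Illustrative thresholds from the 100 TB example; real quotas vary per PI.
SOFT_QUOTA_TB = 100.0
HARD_QUOTA_TB = 101.0
GRACE_PERIOD = timedelta(days=30)


def max_temporary_quota_tb(cpp_tb: float, used_tb: float, rcc_allocated_tb: float = 0.0) -> float:
    """Largest temporary quota that keeps combined usage within CPP plus RCC-allocated storage."""
    return max(0.0, cpp_tb + rcc_allocated_tb - used_tb)


def storage_state(
    midway2_used_tb: float,
    midway3_used_tb: float,
    today: date,
    grace_started: Optional[date] = None,
    soft_tb: float = SOFT_QUOTA_TB,
    hard_tb: float = HARD_QUOTA_TB,
) -> str:
    """Classify combined usage of both systems as 'ok', 'grace', or 'read-only'.

    grace_started is the (hypothetical) date on which combined usage first
    exceeded the soft quota, or None if the countdown has not started yet.
    """
    total_tb = midway2_used_tb + midway3_used_tb
    if total_tb <= soft_tb:
        return "ok"  # within the soft quota (simplification: exactly at the quota counts as ok)
    if total_tb > hard_tb:
        return "read-only"  # over the hard quota: both storage spaces become read-only
    if grace_started is not None and today - grace_started >= GRACE_PERIOD:
        return "read-only"  # the 30-day grace period has expired
    return "grace"  # over the soft quota but still inside the grace period
```

For the 100 TB example, `max_temporary_quota_tb(cpp_tb=100, used_tb=70)` returns `30.0`, matching the 30 TB temporary quota described above.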

All PIs are eligible to purchase high-capacity storage on Midway3. For assistance, please contact us at help@rcc.uchicago.edu.

Network

An HDR InfiniBand (100 Gbps) fabric connects nodes and storage in a fat-tree network topology.

GPU Card Specs

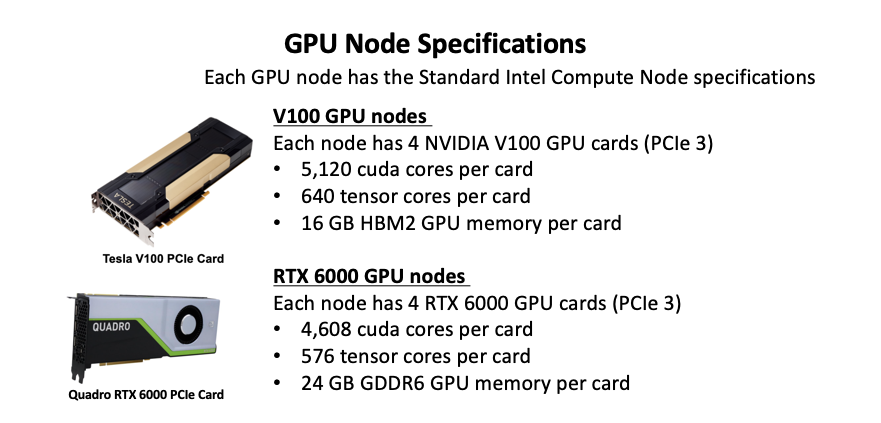

There are 11 GPU nodes that are part of the Midway3 communal resources. Each GPU node has the Standard Intel Compute Node specifications and the following GPU configurations:

- 5 GPU nodes with 4x NVIDIA V100 GPUs per node

- 5 GPU nodes with 4x NVIDIA Quadro RTX 6000 GPUs per node

- 1 GPU node with 4x NVIDIA A100 GPUs per node
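As a cross-check of the GPU configuration above, the following Python sketch tallies the communal GPU inventory. The data layout and names are illustrative, and the cores-per-GPU figure is a nominal ratio that assumes each node's 48 cores are split evenly across its four GPUs; it is not a scheduling rule.

```python
# GPU node inventory transcribed from the list above:
# (GPU model, number of nodes, GPUs per node).
GPU_NODES = [
    ("NVIDIA V100", 5, 4),
    ("NVIDIA Quadro RTX 6000", 5, 4),
    ("NVIDIA A100", 1, 4),
]

# GPU nodes use the standard Intel compute node spec: 2x Xeon Gold 6248R, 48 cores.
CORES_PER_NODE = 48
GPUS_PER_NODE = 4


def gpu_totals(inventory):
    """Map each GPU model to its total GPU count across all nodes of that type."""
    return {model: nodes * per_node for model, nodes, per_node in inventory}


totals = gpu_totals(GPU_NODES)
total_gpus = sum(totals.values())
# Nominal ratio only: assumes CPU cores are divided evenly among a node's GPUs.
cores_per_gpu = CORES_PER_NODE // GPUS_PER_NODE

print(totals)
print(f"{total_gpus} GPUs in total, {cores_per_gpu} cores per GPU")
```

Running it reports 44 GPUs in total (20 V100, 20 RTX 6000, 4 A100) and a nominal 12 cores per GPU.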